Penetration Testing: Search Engine based Reconnaissance

Written by Vivek Jaiswal II

Reconnaissance is an essential phase in Penetration Testing, before actively testing targets for vulnerabilities.

It helps you widen the scope and attack surface and uncover potential vulnerabilities. Many open-source and proprietary automated tools are already available for performing reconnaissance or scanning a host or application for vulnerabilities during a penetration test. However, a manual approach is what gives you an actual understanding of the backend technology and its workflow, which in turn helps you uncover potential vulnerabilities.

A basic reconnaissance includes:

In this article, I will demonstrate how simple search engine based reconnaissance helped me identify a security vulnerability, SQL injection (SQLi), that could lead to dumping the entire database.

While I was recently working on an External Network Penetration Testing project, as usual, I started with the basic reconnaissance approach.

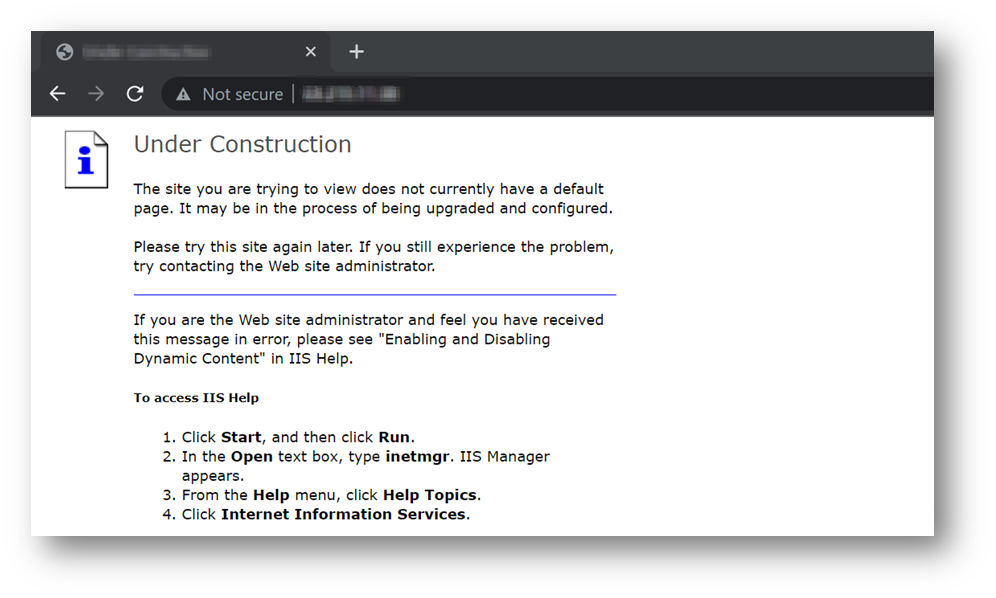

Since this was a network penetration test, I started with a basic port scan and service enumeration. Once the scans were complete, I found port 80/TCP open and serving an HTTP web page. I visited the site and found that it had no features or functionality; it was only a static error page.
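The port check described above can be illustrated with a minimal TCP connect test. This is only a sketch using Python's standard `socket` module, not the scanner used in the engagement; the function name `is_port_open` and the default timeout are assumptions made for illustration.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection performs a full TCP handshake; the socket is
        # closed again as soon as the with-block exits.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `is_port_open("example.com", 80)` reports whether port 80/TCP accepts connections. A dedicated scanner additionally fingerprints the service behind the port, which this sketch does not attempt.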

After this, I started performing some directory brute forcing using a common wordlist of directories. You can find the payload list here.

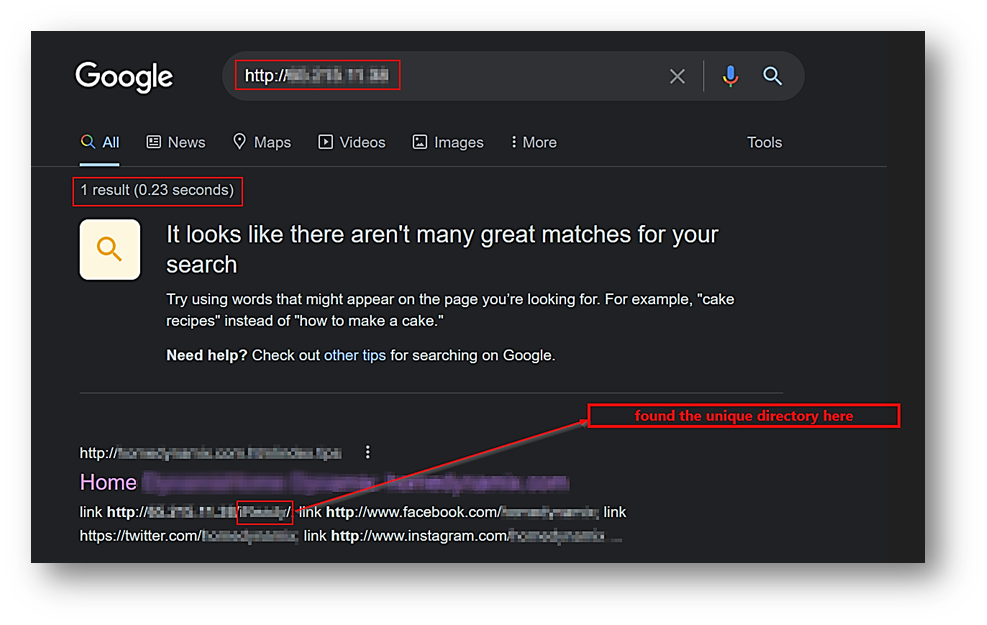

I couldn’t find any valid directory or entry point using the common directory wordlist. I then moved on to another reconnaissance approach: search engine based information discovery.
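Directory brute forcing as described above boils down to requesting one candidate URL per wordlist entry and looking at the status code. The sketch below shows that idea with the standard library only; the helper names (`candidate_urls`, `probe`, `is_interesting`) are hypothetical, and the rule "anything other than 404 deserves a look" is an assumption rather than the logic of any particular tool.

```python
import urllib.error
import urllib.request
from urllib.parse import quote, urljoin


def candidate_urls(base_url, words):
    """Build one candidate URL per usable wordlist entry."""
    base = base_url if base_url.endswith("/") else base_url + "/"
    urls = []
    for word in words:
        word = word.strip()
        # Skip blank lines and comment lines, which are common in wordlists.
        if not word or word.startswith("#"):
            continue
        urls.append(urljoin(base, quote(word.lstrip("/"))))
    return urls


def probe(url, timeout=5.0):
    """Send a HEAD request and return the HTTP status code, or None on failure."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses still carry a status code
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def is_interesting(status_code):
    """Assumed rule of thumb: any answer other than 404 is worth a manual look."""
    return status_code is not None and status_code != 404
```

A run would loop over `candidate_urls("http://example.com", wordlist)`, call `probe` on each URL, and keep those for which `is_interesting` returns True. As the engagement showed, a directory that is not in the wordlist is never found this way.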

Tip: It is always better to use custom directory names wherever possible, as they are difficult to guess and brute force.

First, what is Search Engine based discovery or reconnaissance in penetration testing?

Search engine based reconnaissance, in simple terms, is a method of extracting information that is publicly available on the internet from the indexes of various search engines.

Search engines work in an automated fashion: they use software known as web crawlers that explore the web regularly to find pages to add to their indexes. In fact, the vast majority of pages listed in search results aren’t manually submitted for inclusion but are found and added automatically when the crawlers explore the web.

While I was trying to enumerate via Search Engine discovery, looking for information publicly disclosed over the Internet, I came across a very interesting directory. In this article, I will call it “/unique_directory”.
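The kind of queries used for this discovery can be sketched as follows. The article does not state the exact queries that were run, so the operators chosen here (`site:`, `inurl:`, `intitle:`, in Google-style syntax) and the helper name `build_dorks` are assumptions for illustration.

```python
def build_dorks(domain, terms=("login", "admin")):
    """Return a list of Google-style search queries scoped to one domain."""
    # A bare site: query lists everything the engine has indexed for the domain.
    queries = [f"site:{domain}"]
    for term in terms:
        # Narrow the results to URLs or page titles containing the term.
        queries.append(f"site:{domain} inurl:{term}")
        queries.append(f'site:{domain} intitle:"{term}"')
    return queries
```

Calling `build_dorks("example.com")` yields queries such as `site:example.com inurl:login`, which can be pasted into a search engine. Indexed paths that never appear in a brute-force wordlist tend to surface in the results of the plain `site:` query.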

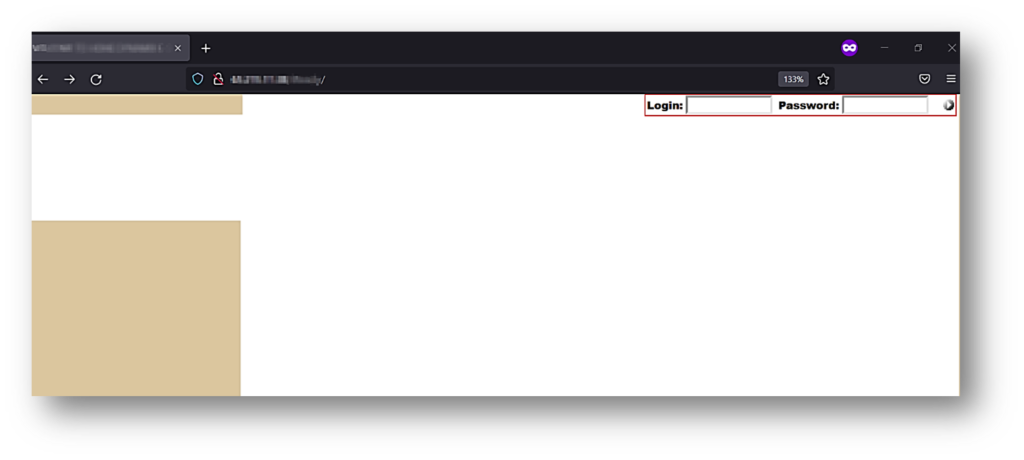

I quickly accessed the URL (http://example.com/unique_directory) and found a simple login page.

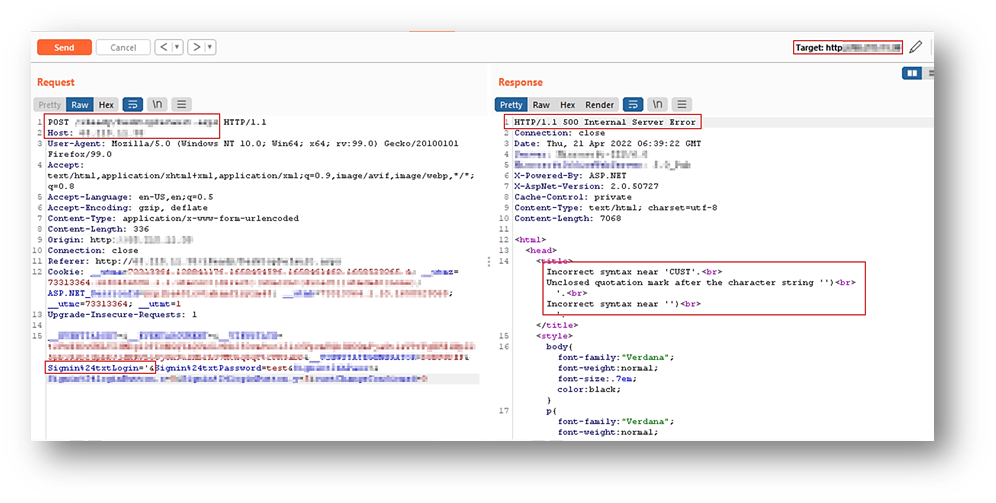

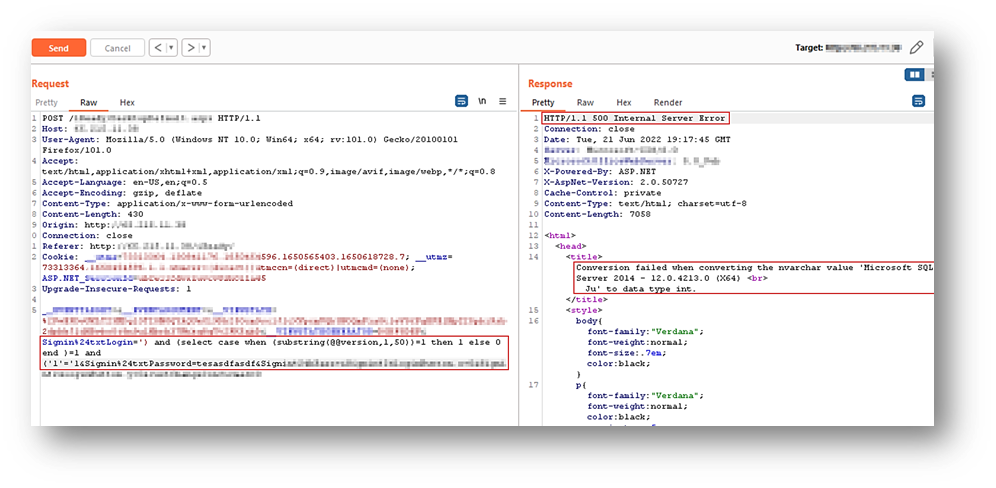

I then started to poke at the login page to find weaknesses that I could leverage and, in the process, I sent a single quote (') as the username. The server responded with an error page, and the error message indicated an unclosed quotation mark, because the added single quote made the backend query syntactically incorrect.

This behavior, together with the error message from the server, indicates a possible SQLi.
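The effect of a stray single quote can be reproduced locally. The sketch below uses an in-memory SQLite database purely for illustration; the target's actual backend, query, and schema are not shown in the article, so the `users` table, the query text, and the function names are all hypothetical.

```python
import sqlite3

_conn = sqlite3.connect(":memory:")
_conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
_conn.execute("INSERT INTO users (username) VALUES ('alice')")


def lookup_unsafe(username):
    """Vulnerable pattern: user input is concatenated into the SQL text."""
    query = f"SELECT id FROM users WHERE (username = '{username}')"
    try:
        return _conn.execute(query).fetchall()
    except sqlite3.Error as exc:
        # Echoing the database error back to the user is the leak
        # that error-based SQL injection relies on.
        return f"SQL error: {exc}"


def lookup_safe(username):
    """Parameterized query: the input is bound as data, never parsed as SQL."""
    return _conn.execute(
        "SELECT id FROM users WHERE (username = ?)", (username,)
    ).fetchall()
```

With this setup, `lookup_unsafe("'")` returns an error string because the quote unbalances the statement, while `lookup_safe("'")` simply returns no rows. Binding parameters this way is the usual remediation for this class of issue.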

After numerous attempts at crafting and recrafting payloads, I observed that the server revealed backend database information in its error message, which confirmed the presence of a SQLi vulnerability and identified the database server used in the backend.

Payload used: ') and (select CASE WHEN (substring(@@version,1,50))=1 THEN 1 ELSE 0 END )=1 and ('1'='1

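The CASE statement goes through conditions and returns a value when the first condition is met (similar to an if-then-else statement). So, once a condition is true, it will stop reading and return the result. If no conditions are true, it returns the value in the ELSE clause. In the payload above, the WHEN condition compares a string, the first 50 characters of @@version, with the integer 1; the database cannot convert that string to a number, which is most likely why the version string appeared in the resulting error message. You can find details of the syntax here.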
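The first-match behaviour of CASE is easy to confirm in isolation. This sketch runs a CASE expression in an in-memory SQLite database; SQLite is used only because it ships with Python, it does not provide `@@version`, and the thresholds and function name are made up for the example.

```python
import sqlite3

_case_conn = sqlite3.connect(":memory:")


def classify(value):
    """Evaluate a CASE expression and return the first matching branch."""
    row = _case_conn.execute(
        "SELECT CASE "
        "WHEN ? > 10 THEN 'high' "   # checked first
        "WHEN ? > 5 THEN 'medium' "  # only reached if the first test fails
        "ELSE 'low' END",            # fallback when no condition is true
        (value, value),
    ).fetchone()
    return row[0]
```

Here `classify(12)` returns `'high'` even though 12 also satisfies the second condition, because evaluation stops at the first true WHEN; `classify(1)` falls through to the ELSE branch.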

As part of your vulnerability or security management strategy, you need to continuously identify and remediate vulnerabilities in every business application, whether small, medium, or large, because there is never a one-time or one-patch solution for vulnerabilities. Business applications, hosts, assets, and every piece of information posted online need to be audited and monitored regularly.

Hence, the best way to avoid such scenarios is to carefully consider the sensitivity of design and configuration information before it is posted online, and to periodically review what is already exposed.

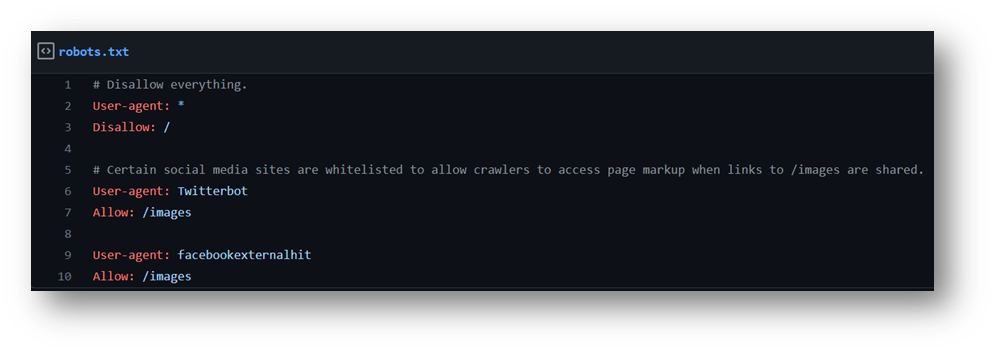

A robots.txt file can also help prevent sensitive pages, directories, or information from being automatically crawled by search engines and stored in their indexes.

A robots.txt file tells search engine crawlers which URLs they can access on your site. It is used mainly to avoid overloading your site with requests, so it is not a foolproof way of keeping content out of search results.
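How a well-behaved crawler interprets such a file can be checked with Python's standard `urllib.robotparser` module. The rules below are a hypothetical example built around the placeholder directory used in this article, not the target's real configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content that asks all crawlers to skip one directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /unique_directory/
"""


def is_crawl_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these rules, `is_crawl_allowed(ROBOTS_TXT, "Googlebot", "http://example.com/unique_directory/login")` returns False, while a URL outside that directory is allowed. Keep in mind that robots.txt is itself publicly readable, so listing a sensitive path there also advertises it, and crawlers that ignore the file are not stopped by it.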

One can also block search indexing with noindex: a noindex meta tag or HTTP response header can be added to prevent a page or other resource from showing up in Google Search. Regardless of whether other websites link to it, the page will be removed from Google Search results when Googlebot crawls it again and notices the tag or header.
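Whether a page actually carries a noindex directive can be verified from its response. The sketch below checks both places mentioned above, the `X-Robots-Tag` response header and the robots meta tag, using only the standard library; the function and class names are hypothetical, and fetching the page is left to the caller.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html, headers=None):
    """Return True if the response headers or the HTML ask not to be indexed."""
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            return True
    parser = _RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

A page containing `<meta name="robots" content="noindex">` makes `has_noindex` return True. For the directive to take effect the crawler must be able to fetch the page, so blocking the same URL in robots.txt would hide the tag from it.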

Similarly, you can temporarily block search results from your site or manage SafeSearch filtering.

Details on this can be found here.

Every piece of information available on the internet could be sensitive and can be a potential entry point for attackers, which could later be escalated to dump the entire database.

Even if you expose sensitive information unintentionally, it will still be crawled and indexed by search engines, and an attacker can easily extract and enumerate it via search engine based discovery or Google dorking.

Useful Tip: While performing search engine based reconnaissance, do not limit testing to just one search engine provider, as different search engines may generate different results. Search engine results can vary in a few ways, depending on when the engine last crawled content, and the algorithm the engine uses to determine relevant pages.
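Running the same query against several engines is easy to script. The sketch below only builds the search URLs; the set of engines and the helper name `search_urls` are choices made for this example, and each engine may support a different subset of search operators.

```python
from urllib.parse import urlencode

# Public search endpoints; all three take the query in the "q" parameter.
SEARCH_ENDPOINTS = {
    "google": "https://www.google.com/search",
    "bing": "https://www.bing.com/search",
    "duckduckgo": "https://duckduckgo.com/",
}


def search_urls(query):
    """Return a mapping of engine name to a search URL for the query."""
    encoded = urlencode({"q": query})  # percent-encodes ':' and spaces
    return {name: f"{endpoint}?{encoded}" for name, endpoint in SEARCH_ENDPOINTS.items()}
```

For instance, `search_urls("site:example.com")` returns one URL per engine that can be opened in a browser to compare what each index has picked up.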

Every application, host, API, or piece of information posted online, whether small, medium, or large, should be thoroughly examined. It is highly recommended to regularly review the sensitivity of the information that current designs and configurations expose online.
